E(n) Equivariant Graph Neural Networks

Victor Garcia Satorras$^{1}$, Emiel Hoogeboom$^{1}$, Max Welling$^{1}$

$^{1}$UvA-Bosch Delta Lab, University of Amsterdam, Netherlands.

Correspondence to: Victor Garcia Satorras [email protected], Emiel Hoogeboom [email protected], Max Welling [email protected].

Proceedings of the $38^{\text{th}}$ International Conference on Machine Learning, PMLR 139, 2021. Copyright 2021 by the author(s).

Abstract

This paper introduces a new model to learn graph neural networks equivariant to rotations, translations, reflections and permutations called $\mathrm{E}(n)$-Equivariant Graph Neural Networks (EGNNs). In contrast with existing methods, our work does not require computationally expensive higher-order representations in intermediate layers while it still achieves competitive or better performance. In addition, whereas existing methods are limited to equivariance on $3$ dimensional spaces, our model is easily scaled to higher-dimensional spaces. We demonstrate the effectiveness of our method on dynamical systems modelling, representation learning in graph autoencoders and predicting molecular properties.

Executive Summary: This paper tackles a key challenge in applying deep learning to scientific data like molecular structures, particle simulations, and 3D point clouds: these datasets often have inherent symmetries, such as rotations, translations, reflections, and node permutations, that standard neural networks ignore, leading to inefficient or inaccurate models. Exploiting these symmetries through equivariance—where the model's output transforms in the same way as the input under such changes—can improve performance and data efficiency, especially in fields like chemistry, physics, and materials science where precise predictions of properties or dynamics are critical. This is timely as growing datasets demand scalable methods that reduce computational costs without sacrificing accuracy.

The work introduces and evaluates E(n)-Equivariant Graph Neural Networks (EGNNs), a new architecture designed to process graph-structured data (nodes with features and connections) while preserving equivariance to Euclidean transformations in any dimension n and permutations of nodes. In simple terms, EGNNs extend standard graph neural networks by incorporating coordinate updates based on relative distances between nodes, ensuring the model respects spatial symmetries without complex intermediate representations.

The authors developed EGNNs as a modular layer that alternates between updating node features via message passing and adjusting node positions along radial directions, using multi-layer perceptrons for learnable functions. They tested it on three tasks using simulated and benchmark data: predicting trajectories in a 3D charged-particle system (3,000 training trajectories of 1,000 timesteps each), unsupervised graph reconstruction via autoencoders (5,000 synthetic graphs with 7–20 nodes), and estimating 12 chemical properties of molecules from the QM9 dataset (100,000 training molecules with up to 29 atoms). Comparisons involved baselines like standard graph neural networks, radial fields, tensor field networks, and SE(3) transformers, with models trained over thousands of epochs using common optimizers and activations. Key assumptions included fully connected graphs or inferred edges for scalability, and focus on scalar and vector inputs without higher-order tensors.

The most significant findings show EGNNs consistently outperform or closely match competitors. First, in dynamical systems, EGNNs reduced position prediction error by 32% compared to the next-best method (radial fields) and were over 10 times faster than spherical-harmonic-based models, while being more data-efficient—outperforming non-equivariant networks with as few as 100 samples by leveraging symmetries to avoid learning redundant transformations. Second, for graph autoencoders, EGNNs achieved near-perfect reconstruction (0.06–0.11% edge error) on synthetic datasets, solving symmetry issues in featureless graphs where standard models failed, even when overfitting small sets of 100 graphs. Third, on QM9 molecular properties, EGNNs yielded the lowest or near-lowest mean absolute errors across tasks (e.g., 0.029 Debye for dipole moments, versus 0.030–0.064 for others), rivaling advanced models without needing expensive computations. Overall, EGNNs scaled efficiently to higher dimensions and ran in milliseconds per batch on standard GPUs.

These results imply that simple relative-distance features suffice to capture geometric symmetries in many tasks, challenging the need for computationally heavy higher-order representations like spherical harmonics, which are limited to 3D anyway. This lowers barriers for applications: in drug discovery, EGNNs could speed up property predictions to screen candidates faster, reducing costs; in simulations, they enhance accuracy for control systems or reinforcement learning with less data and risk of overfitting to specific orientations. Unlike expectations from prior work, EGNNs show equivariance boosts generalization even in invariant tasks like property estimation, as proven geometrically—point configurations are uniquely identified by pairwise distances up to transformations.

Adopt EGNNs as a default for symmetry-aware graph tasks, integrating them into pipelines for molecular modeling or 3D vision; for instance, use the velocity-extended version for dynamic predictions and the edge-inference variant for sparse data. Next, pilot integrations in real-world tools like protein-folding simulators, comparing against domain-specific baselines, and explore hybrids with attention mechanisms for larger graphs. If scaling to millions of nodes, test sparse implementations to manage memory.

While robust across benchmarks, limitations include reliance on full message passing (potentially inefficient for very large graphs without sparsity tweaks) and focus on basic input types, which may underperform where angular features matter. Confidence is high in the core claims, backed by rigorous proofs and multiple datasets, but caution is advised for non-Euclidean symmetries or noisy real-world data, where additional validation via larger pilots would strengthen applicability.

1. Introduction

Section Summary: Deep learning has advanced by incorporating built-in assumptions about problem symmetries, like how images shift or graphs rearrange, to make models more efficient and accurate. Many real-world challenges, such as analyzing point clouds, molecules, or particle simulations, involve three-dimensional symmetries like translations, rotations, and reflections, and recent techniques aim to make neural networks handle these transformations without losing performance, though they often rely on complex computations for higher-level data representations. This paper introduces a simpler neural network design that achieves these symmetries across dimensions, skips costly elements like spherical harmonics, and delivers top or near-top results in tasks like simulating dynamics, learning graph representations, and predicting molecular properties.

Although deep learning has largely replaced hand-crafted features, many advances are critically dependent on inductive biases in deep neural networks. An effective method to restrict neural networks to relevant functions is to exploit the symmetry of problems by enforcing equivariance with respect to transformations from a certain symmetry group. Notable examples are translation equivariance in Convolutional Neural Networks and permutation equivariance in Graph Neural Networks [1, 2, 3].

Many problems exhibit 3D translation and rotation symmetries. Some examples are point clouds [4], 3D molecular structures [5] or N-body particle simulations [6]. The group corresponding to these symmetries is named the Euclidean group: SE(3) or when reflections are included E(3). It is often desired that predictions on these tasks are either equivariant or invariant with respect to E(3) transformations.

Recently, various forms and methods to achieve E(3) or SE(3) equivariance have been proposed [7, 8, 9, 10]. Many of these works achieve innovations in studying types of higher-order representations for intermediate network layers. However, the transformations for these higher-order representations require coefficients or approximations that can be expensive to compute. Additionally, in practice for many types of data the inputs and outputs are restricted to scalar values (for instance temperature or energy, referred to as type- $0$ in literature) and 3d vectors (for instance velocity or momentum, referred to as type- $1$ in literature).

In this work we present a new architecture that is translation, rotation and reflection equivariant ($\mathrm{E}(n)$), and permutation equivariant with respect to an input set of points. Our model is simpler than previous methods in that it does not require the spherical harmonics as in [7, 8] while it can still achieve competitive or better results. In addition, equivariance in our model is not limited to the 3-dimensional space and can be scaled to larger dimensional spaces without a significant increase in computation.

We evaluate our method in modelling dynamical systems, representation learning in graph autoencoders and predicting molecular properties in the QM9 dataset. Our method reports the best or very competitive performance in all three experiments.

2. Background

Section Summary: This background section explains equivariance, a property where a function transforms its input in a way that consistently affects the output, such as shifting, rotating, or rearranging a set of points or particles without changing the underlying relationships. It covers three key types: translation equivariance, where moving all points equally shifts the result the same way; rotation and reflection equivariance, where turning or flipping the input does the same to the output; and permutation equivariance, where reordering points just reorders the output accordingly. It also describes graph neural networks, which process data structured as connected nodes and edges, using layers that exchange information between neighbors to update node features while maintaining permutation equivariance.

In this section we introduce the relevant materials on equivariance and graph neural networks which will later complement the definition of our method.

2.1. Equivariance

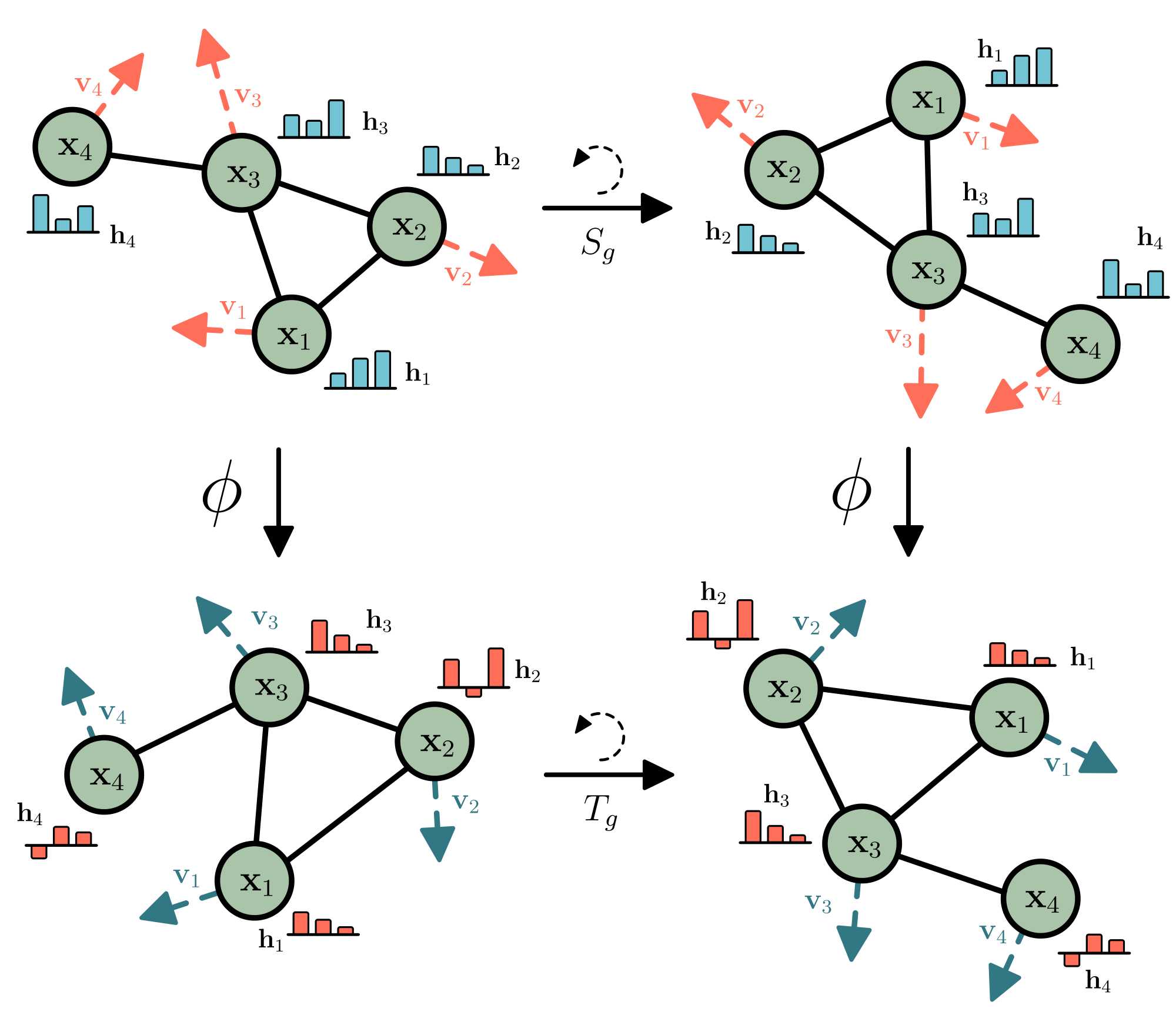

Let $T_g: X \xrightarrow{} X$ be a set of transformations on $X$ for the abstract group $g \in G$. We say a function $\phi: X \xrightarrow{} Y$ is equivariant to $g$ if there exists an equivalent transformation on its output space $S_g: Y \xrightarrow{} Y$ such that:

$ \phi(T_g(\mathbf{x})) = S_g(\phi(\mathbf{x}))\tag{1} $

As a practical example, let $\phi(\cdot)$ be a non-linear function, $\mathbf{x} = (\mathbf{x}_1, \dots, \mathbf{x}_M) \in \mathbb{R}^{M \times n}$ an input set of $M$ point clouds embedded in a $n$-dimensional space, $\phi(\mathbf{x}) = \mathbf{y} \in \mathbb{R}^{M \times n}$ the transformed set of point clouds, $T_g$ a translation on the input set $T_g(\mathbf{x}) = \mathbf{x} + g$ and $S_g$ an equivalent translation on the output set $S_g(\mathbf{y}) = \mathbf{y} + g$. If our transformation $\phi: X \xrightarrow{} Y$ is translation equivariant, translating the input set $T_g(\mathbf{x})$ and then applying the function $\phi(T_x(\mathbf{x}))$ on it, will deliver the same result as first running the function $y=\phi(\mathbf{x})$ and then applying an equivalent translation to the output $T_g(\mathbf{y})$ such that Equation 1 is fulfilled and $\phi(\mathbf{x} + g) = \phi(\mathbf{x}) + g$. In this work we explore the following three types of equivariance on a set of particles $\mathbf{x}$:

- Translation equivariance. Translating the input by $g \in \mathbb{R}^n$ results in an equivalent translation of the output. Let $\mathbf{x} + g$ be shorthand for $(\mathbf{x}_1 + g, \ldots, \mathbf{x}_M + g)$. Then $\mathbf{y} + g = \phi(\mathbf{x} + g)$

- Rotation (and reflection) equivariance. For any orthogonal matrix $Q \in \mathbb{R}^{n \times n}$, let $Q \mathbf{x}$ be shorthand for $(Q \mathbf{x}_1, \ldots, Q \mathbf{x}_M)$. Then rotating the input results in an equivalent rotation of the output $Q \mathbf{y} = \phi(Q \mathbf{x})$.

- Permutation equivariance. Permuting the input results in the same permutation of the output $P(\mathbf{y}) = \phi(P(\mathbf{x}))$ where $P$ is a permutation on the row indexes.

Note that velocities $\mathbf{v} \in \mathbb{R}^{M \times n}$ are unaffected by translations, but they transform equivalently under rotation (2) and permutation (3). Our method introduced in Section 3 will satisfy the three above mentioned equivariant constraints.

2.2. Graph Neural Networks

Graph Neural Networks are permutation equivariant networks that operate on graph structured data [1, 2, 3]. Given a graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ with nodes $v_i \in \mathcal{V}$ and edges $e_{ij} \in \mathcal{E}$ we define a graph convolutional layer following notation from [11] as:

$ \begin{aligned} \mathbf{m}_{ij} &= \phi_e(\mathbf{h}i^l, \mathbf{h}j^l, a{ij}) \ \mathbf{m}i &= \sum{j \in \mathcal{N}(i)} \mathbf{m}{i j} \ \mathbf{h}_i^{l+1} &= \phi_h(\mathbf{h}_i^l, \mathbf{m}_i) \ \end{aligned}\tag{2} $

Where $\mathbf{h}i^l \in \mathbb{R}^{\text{nf}}$ is the nf-dimensional embedding of node $v_i$ at layer $l$. $a{ij}$ are the edge attributes. $\mathcal{N}(i)$ represents the set of neighbors of node $v_i$. Finally, $\phi_e$ and $\phi_h$ are the edge and node operations respectively which are commonly approximated by Multilayer Perceptrons (MLPs).

3. Equivariant Graph Neural Networks

Section Summary: Equivariant Graph Neural Networks (EGNNs) extend standard graph neural networks by incorporating spatial coordinates for nodes, allowing the model to handle geometric data like particle positions while preserving symmetries such as rotations, translations, and node permutations. The core layer, called the Equivariant Graph Convolutional Layer, updates node features and coordinates by processing edge messages that include relative distances between points, ensuring the outputs remain consistent under geometric transformations. This design maintains equivariance to Euclidean group symmetries, and the model can be adapted to track additional properties like particle momentum for more detailed simulations.

In this section we present Equivariant Graph Neural Networks (EGNNs). Following the notation from background Section 2.2, we consider a graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ with nodes $v_i \in \mathcal{V}$ and edges $e_{ij} \in \mathcal{E}$. In addition to the feature node embeddings $\mathbf{h}_i \in \mathbb{R}^{\text{nf}}$ we now also consider a $n$-dimensional coordinate $\mathbf{x}_i \in \mathbb{R}^{\text{n}}$ associated with each of the graph nodes. Our model will preserve equivariance to rotations and translations on these set of coordinates $\mathbf{x}_i$ and it will also preserve equivariance to permutations on the set of nodes $\mathcal{V}$ in the same fashion as GNNs.

Our Equivariant Graph Convolutional Layer (EGCL) takes as input the set of node embeddings $\mathbf{h}^l ={\mathbf{h}0^l, \dots, \mathbf{h}{M-1}^l}$, coordinate embeddings $\mathbf{x}^l ={\mathbf{x}0^l, \dots, \mathbf{x}{M-1}^l}$ and edge information $\mathcal{E}=(e_{ij})$ and outputs a transformation on $\mathbf{h}^{l+1}$ and $\mathbf{x}^{l+1}$. Concisely: $\mathbf{h}^{l+1}, \mathbf{x}^{l+1} = \text{EGCL}[\mathbf{h}^{l}, \mathbf{x}^{l}, \mathcal{E}]$. The equations that define this layer are the following:

$ \begin{align} \mathbf{m}{ij} &=\phi{e}\left(\mathbf{h}{i}^{l}, \mathbf{h}{j}^{l}, \left| \mathbf{x}{i}^{l}- \mathbf{x}{j}^{l}\right|^{2}, a_{i j}\right) \tag{3} \ \mathbf{x}{i}^{l+1} &= \mathbf{x}{i}^{l}+ C\sum_{j \neq i}\left(\mathbf{x}{i}^{l}- \mathbf{x}{j}^{l}\right) \phi_{x}\left(\mathbf{m}{ij}\right) \tag{4} \ \mathbf{m}{i} &=\sum_{j \neq i} \mathbf{m}{ij} \tag{5} \ \mathbf{h}{i}^{l+1} &=\phi_{h}\left(\mathbf{h}{i}^l, \mathbf{m}{i}\right) \tag{6} \end{align} $

Notice the main differences between the above proposed method and the original Graph Neural Network from equation 2 are found in equations 3 and 4. In equation 3 we now input the relative squared distance between two coordinates $|\mathbf{x}{i}^{l}-\mathbf{x}{j}^{l}|^{2}$ into the edge operation $\phi_e$. The embeddings $\mathbf{h}i^l$, $\mathbf{h}j^l$, and the edge attributes $a{ij}$ are also provided as input to the edge operation as in the GNN case. In our case the edge attributes will incorporate the edge values $a{ij} = e_{ij}$, but they can also include additional edge information.

In Equation 4 we update the position of each particle $\mathbf{x}i$ as a vector field in a radial direction. In other words, the position of each particle $\mathbf{x}i$ is updated by the weighted sum of all relative differences $(\mathbf{x}i - \mathbf{x}j){\forall j}$. The weights of this sum are provided as the output of the function $\phi_x: \mathbb{R}^{\text{nf}} \rightarrow \mathbb{R}^1$ that takes as input the edge embedding $\mathbf{m}{ij}$ from the previous edge operation and outputs a scalar value. $C$ is chosen to be $1/(M-1)$, which divides the sum by its number of elements. This equation is the main difference of our model compared to standard GNNs and it is the reason why equivariances 1, 2 are preserved (proof in Appendix A). Despite its simplicity, this equivariant operation is very flexible since the embedding $\mathbf{m}{ij}$ can carry information from the whole graph and not only from the given edge $e{ij}$.

Finally, equations 5 and 6 follow the same updates than standard GNNs. Equation 5 is the aggregation step, in this work we choose to aggregate messages from all other nodes $j\neq i$, but we could limit the message exchange to a given neighborhood $j \in \mathcal{N}(i)$ if desired in both equations 5 and 4. Equation 6 performs the node operation $\phi_h$ which takes as input the aggregated messages $\mathbf{m}_i$, the node emedding $\mathbf{h}_i^{l}$ and outputs the updated node embedding $\mathbf{h}_i^{l+1}$.

3.1. Analysis on E(n) equivariance

In this section we analyze the equivariance properties of our model for $E(3)$ symmetries (i.e. properties 1 and 2 stated in Section 2.1). In other words, our model should be translation equivariant on $\mathbf{x}$ for any translation vector $g \in \mathbb{R}^n$ and it should also be rotation and reflection equivariant on $\mathbf{x}$ for any orthogonal matrix $Q \in \mathbb{R}^{n \times n}$. More formally our model satisfies:

$ Q \mathbf{x}^{l+1} + g, \mathbf{h}^{l+1} = \mathrm{EGCL}(Q \mathbf{x}^l + g, \mathbf{h}^l) $

We provide a formal proof of this in Appendix A. Intuitively, let's consider a $\mathbf{h}^l$ feature which is already $\mathrm{E}(n)$ invariant, then we can see that the resultant edge embedding $\mathbf{m}{ij}$ from Equation 3 will also be $\mathrm{E}(n)$ invariant, because in addition to $\mathbf{h}^l$, it only depends on squared distances $| \mathbf{x}{i}^l- \mathbf{x}_{j}^l|^{2}$, which are $\mathrm{E}(n)$ invariant. Next, Equation 4 computes $\mathbf{x}^{l+1}_i$ by a weighted sum of differences $(\mathbf{x}_i - \mathbf{x}_j)$ which is added to $\mathbf{x}i$, this transforms as a type-1 vector and preserves equivariance (see Appendix A). Finally the last two equations 5 and 6 that generate the next layer node-embeddings $\mathbf{h}^{l+1}$ remain $\mathrm{E}(n)$ invariant since they only depend on $\mathbf{h}^l$ and $\mathbf{m}{ij}$ which, as we saw above, are $\mathrm{E}(n)$ invariant. Therefore the output $\mathbf{h}^{l+1}$ is $\mathrm{E}(n)$ invariant and $\mathbf{x}^{l+1}$ is $\mathrm{E}(n)$ equivariant to $\mathbf{x}^{l}$. Inductively, a composition of EGCLs will also be equivariant.

3.2. Extending EGNNs for vector type representations

In this section we propose a slight modification to the presented method such that we explicitly keep track of the particle's momentum. In some scenarios this can be useful not only to obtain an estimate of the particle's velocity at every layer but also to provide an initial velocity value in those cases where it is not 0. We can include momentum to our proposed method by just replacing Equation 4 of our model with the following equation:

$ \begin{aligned} \mathbf{v}{i}^{l+1}&= \phi{v}\left(\mathbf{h}{i}^l\right)\mathbf{v}{i}^\text{init} + C\sum_{j \neq i}\left(\mathbf{x}{i}^{l}-\mathbf{x}{j}^{l}\right) \phi_{x}\left(\mathbf{m}{ij}\right) \ \mathbf{x}{i}^{l+1} &=\mathbf{x}_{i}^{l}+ \mathbf{v}_i^{l+1} \end{aligned}\tag{7} $

Note that this extends the EGCL layer as $\mathbf{h}^{l+1}, \mathbf{x}^{l+1}, \mathbf{v}^{l+1} = \text{EGCL}[\mathbf{h}^{l}, \mathbf{x}^{l}, \mathbf{v}^{\text{init}}, \mathcal{E}]$. The only difference is that now we broke down the coordinate update (eq. 4) in two steps, first we compute the velocity $\mathbf{v}^{l+1}_i$ and then we use this velocity to update the position $\mathbf{x}^l_i$. The initial velocity $\mathbf{v}^{\text{init}}_i$ is scaled by a new function $\phi_v: \mathbb{R}^N \rightarrow \mathbb{R}^1$ that maps the node embedding $\mathbf{h}_i^l$ to a scalar value. Notice that if the initial velocity is set to zero (${\mathbf{v}}^{\text{init}}_i = 0$), both equations 4 and 7 become exactly the same. We proof the equivariance of this variant of the model in Appendix B.1. This variant is used in experiment Section 5.1 where we provide the initial velocity of the system, and predict a relative position change.

\begin{tabular}{|l | c | c | c | c | c |}

\toprule

& GNN & Radial Field & TFN & Schnet & EGNN \\

\midrule

\multirow{2}*{Edge} &

\multirow{2}*{$\mathbf{m}_{ij} = \phi_e(\mathbf{h}_i^l, \mathbf{h}_j^l, a_{ij})$} &

\multirow{2}*{$\mathbf{m}_{ij} = \phi_{\text{rf}}(\| \mathbf{r}_{ij}^{l}\|) \mathbf{r}_{ij}^{l}$} &

\multirow{2}*{$\mathbf{m}_{ij} = \sum_{k}\mathbf{W}^{lk}\mathbf{r}_{ji}^{l}\mathbf{h}_j^{lk}$} &

\multirow{2}*{$\mathbf{m}_{ij}=\phi_{\text{cf}}(\| \mathbf{r}_{ij}^{l}\|) \phi_\text{s}(\mathbf{h}_{j}^{l})$ } &

$\mathbf{m}_{ij} =\phi_{e}(\mathbf{h}_{i}^{l}, \mathbf{h}_{j}^{l}, \| \mathbf{r}_{ij}^{l}\|^{2}, a_{i j})$ \\

& & & & & $\hat{\mathbf{m}}_{ij} = \mathbf{r}_{ij}^{l}\phi_x(\mathbf{m}_{ij})$ \\

\midrule

\multirow{2}*{Agg}. &

\multirow{2}*{$\mathbf{m}_i = \sum_{j \in \mathcal{N}(i)} \mathbf{m}_{ij}$} &

\multirow{2}*{$\mathbf{m}_i = \sum_{j \neq i} \mathbf{m}_{ij}$} &

\multirow{2}*{$\mathbf{m}_i = \sum_{j \neq i} \mathbf{m}_{ij}$} &

\multirow{2}*{$\mathbf{m}_i = \sum_{j \neq i} \mathbf{m}_{ij}$} &

$\mathbf{m}_i = \sum_{j \neq i} \mathbf{m}_{ij}$ \\

& & & & &

$\hat{\mathbf{m}}_i = C\sum_{j \neq i} \hat{\mathbf{m}}_{ij}$ \\

\midrule

\multirow{2}*{Node} &

\multirow{2}*{$\mathbf{h}_i^{l+1} = \phi_h(\mathbf{h}_i^l, \mathbf{m}_i)$} &

\multirow{2}*{$\mathbf{x}_i^{l+1} = \mathbf{x}_i^{l} + \mathbf{m}_i$} &

\multirow{2}*{$\mathbf{h}_i^{l+1} = w^{ll}\mathbf{h}_i^{l} + \mathbf{m}_i$} &

\multirow{2}*{$\mathbf{h}_i^{l+1} = \phi_h(\mathbf{h}_i^l, \mathbf{m}_i)$} &

$\mathbf{h}_{i}^{l+1} =\phi_{h}\left(\mathbf{h}_{i}^l, \mathbf{m}_{i}\right)$ \\

&

&

&

&

&

$\mathbf{x}_i^{l+1} = \mathbf{x}_i^{l} + \hat{\mathbf{m}}_i$ \\

\midrule

&

Non-equivariant &

$\mathrm{E}(n)$-Equivariant &

$\mathrm{SE}(3)$-Equivariant &

$\mathrm{E}(n)$-Invariant &

$\mathrm{E}(n)$-Equivariant \\

\bottomrule

\end{tabular}

3.3. Inferring the edges

Given a point cloud or a set of nodes, we may not always be provided with an adjacency matrix. In those cases we can assume a fully connected graph where all nodes exchange messages with each other $j \neq i$ as done in Equation 5. This fully connected approach may not scale well to large point clouds where we may want to locally limit the exchange of messages $\mathbf{m}i = \sum{j \in \mathcal{N}(i)}\mathbf{m}_{ij}$ to a neighborhood $\mathcal{N}(i)$ to avoid an overflow of information.

Similarly to [14, 6], we present a simple solution to infer the relations/edges of the graph in our model, even when they are not explicitly provided. Given a set of neighbors $\mathcal{N}(i)$ for each node $i$, we can re-write the aggregation operation from our model (eq. 5) in the following way:

$ \mathbf{m}i = \sum{j \in \mathcal{N}(i)}\mathbf{m}{ij} = \sum{j \neq i} e_{ij}\mathbf{m}_{ij}\tag{8} $

Where $e_{ij}$ takes value $1$ if there is an edge between nodes $(i, j)$ and $0$ otherwise. Now we can choose to approximate the relations $e_{ij}$ with the following function $e_{ij} \approx \phi_{inf}(\mathbf{m}{ij})$, where $\phi{inf}: \mathbb{R}^{nf} \rightarrow [0, 1]^1$ resembles a linear layer followed by a sigmoid function that takes as input the current edge embedding and outputs a soft estimation of its edge value. This modification does not change the $\mathrm{E}(n)$ properties of the model since we are only operating on the messages $\mathbf{m}_{ij}$ which are already $\mathrm{E}(n)$ invariant.

4. Related Work

Section Summary: This section reviews existing research on group equivariant neural networks, which are designed to handle transformations like rotations and translations in tasks such as 3D modeling, highlighting approaches using spherical harmonics for efficiency in specific cases but noting their high computational cost and limitations to three dimensions. It also covers message passing techniques for molecular data, starting from basic permutation-equivariant graph neural networks and advancing to ones that incorporate distances, angles, and directional info for full rotation and translation invariance, often relying on complex functions like Bessel or spherical harmonics. The authors' EGNN method stands out by achieving broad equivariance in any dimension through simple updates to both node features and coordinates, avoiding expensive computations while matching the flexibility of standard graph networks.

Group equivariant neural networks have demonstrated their effectiveness in a wide variety of tasks ([15, 16, 17, 18, 19]). Recently, various forms and methods to achieve E(3) or SE(3) equivariance have been proposed. [7, 8] utilize the spherical harmonics to compute a basis for the transformations, which allows transformations between higher-order representations. A downside to this method is that the spherical harmonics need to be recomputed which can be expensive. Currently, an extension of this method to arbitrary dimensions is unknown. [9] parametrize transformations by mapping kernels on the Lie Algebra. For this method the neural network outputs are in certain situations stochastic, which may be undesirable. [20] proposes a set of isometric invariant and equivairant transformations for Graph Neural Networks. [12, 10] propose an $\mathrm{E}(n)$ equivariant network to model 3D point clouds, but the method is only defined for positional data on the nodes without any feature dimensions.

Another related line of research concerns message passing algorithms on molecular data. [11] presented a message passing setting (or Graph Neural Network) for quantum chemistry, this method is permutation equivariant but not translation or rotation equivariant. [21] extends the equivariance of GNNs such that each neuron transforms in a specific way under permutations, but this extension only affects its permutation group and not translations or rotations in a geometric space. Further works [13, 22] build $\mathrm{E}(n)$ invariant message passing networks by inputting the relative distances between points. [23, 24] in addition to relative distances it includes a modified message passing scheme analogous to Belief Propagation that considers angles and directional information equivariant to rotations. It also uses Bessel functions and spherical harmonics to construct an orthogonal basis. [25, 26] include $SO(3)$ equivariance in its intermediate layers for modelling the behavior and properties of molecular data. Our method is also framed as a message passing framework but in contrast to these methods it achieves $\mathrm{E}(n)$ equivariance.

Relationship with existing methods

In Table 1 the EGNN equations are detailed together with some of its closest methods from the literature under the message passing notation from [11]. This table aims to provide a simple way to compare these different algorithms. It is structured in three main rows that describe i) the edge ii) aggregation and iii) node update operations. The GNN algorithm is the same as the previously introduced in Section 2.1. Our EGNN algorithm is also equivalent to the description in Section 3 but notation has been modified to match the (edge, aggregation, node) format. In all equations $\mathbf{r}_{ij}^l = (\mathbf{x}i - \mathbf{x}j)^l$. Notice that except the EGNN, all algorithms have the same aggregation operation and the main differences arise from the edge operation. The algorithm that we call "Radial Field" is the $\mathrm{E}(n)$ equivariant update from [12]. This method is $\mathrm{E}(n)$ equivariant, however its main limitation is that it only operates on $\mathbf{x}$ and it doesn't propagate node features $\mathbf{h}$ among nodes. In the method $\phi{rf}$ is modelled as an MLP. Tensor Field Networks (TFN) [7] instead propagate the node embeddings $\mathbf{h}$ but it uses spherical harmonics to compute its learnable weight kernel $\mathbf{W}^{\ell k}: \mathbb{R}^{3} \rightarrow \mathbb{R}^{(2 \ell+1) \times(2 k+1)}$ which preserves $SE(3)$ equivariance but is expensive to compute and limited to the 3 dimensional space. The SE(3) Transformer [8] (not included in this table), can be interpreted as an extension of TFN with attention. Schnet [13] can be interpreted as an $\mathrm{E}(n)$ invariant Graph Neural Network where $\phi{cf}$ receives as input relative distances and outputs a continuous filter convolution that multiplies the neighbor embeddings $\mathbf{h}$. Our EGNN differs from these other methods in terms that it performs two different updates in each of the table rows, one related to the embeddings $\mathbf{h}$ and another related to the coordinates $\mathbf{x}$, these two variables exchange information in the edge operation. In summary the EGNN can retain the flexibility of GNNs while remaining $\mathrm{E}(n)$ equivariant as the Radial Field algorithm and without the need to compute expensive operations (i.e. spherical harmonics).

5. Experiments

Section Summary: In the Experiments section, researchers test their Equivariant Graph Neural Network (EGNN) on two tasks: predicting the future positions of five charged particles interacting in three-dimensional space over 1,000 time steps, and building an equivariant graph autoencoder for unsupervised learning of graph representations. For the particle simulation, the EGNN outperforms other models like graph neural networks and equivariant alternatives, achieving the lowest error rate while remaining computationally efficient, and it proves more data-efficient across varying training sizes. The graph autoencoder experiment demonstrates that incorporating equivariance improves performance over standard neural networks on graph datasets.

5.1. Modelling a dynamical system — N-body system

In a dynamical system a function defines the time dependence of a point or set of points in a geometrical space. Modelling these complex dynamics is crucial in a variety of applications such as control systems [27], model based dynamics in reinforcement learning [28], and physical systems simulations [29, 30]. In this experiment we forecast the positions for a set of particles which are modelled by simple interaction rules, yet can exhibit complex dynamics.

Similarly to [8], we extended the Charged Particles N-body experiment from [6] to a 3 dimensional space. The system consists of 5 particles that carry a positive or negative charge and have a position and a velocity associated in 3-dimensional space. The system is controlled by physics rules: particles are attracted or repelled depending on their charges. This is an equivariant task since rotations and translations on the input set of particles result in the same transformations throughout the entire trajectory.

Dataset: We sampled 3.000 trajectories for training, 2.000 for validation and 2.000 for testing. Each trajectory has a duration of 1.000 timesteps. For each trajectory we are provided with the initial particle positions $\mathbf{p}^{(0)}={ \mathbf{p}^{(0)}_1, \dots \mathbf{p}^{(0)}_5 } \in \mathbb{R}^{5 \times 3}$, their initial velocities $\mathbf{v}^{(0)}={ \mathbf{v}^{(0)}_1, \dots \mathbf{v}^{(0)}_5 } \in \mathbb{R}^{5 \times 3}$ and their respective charges $\mathbf{c} = {c_1, \dots c_5} \in {-1, 1}^5$. The task is to estimate the positions of the five particles after 1.000 timesteps. We optimize the averaged Mean Squared Error of the estimated position with the ground truth one.

Implementation details: In this experiment we used the extension of our model that includes velocity from Section 3.2. We input the position $\mathbf{p}^{(0)}$ as the first layer coordinates $\mathbf{x}^0$ of our model and the velocity $\mathbf{v}^{(0)}$ as the initial velocity in Equation 4, the norms $| \mathbf{v}_i^{(0)} |$ are also provided as features to $\mathbf{h}i^0$ through a linear mapping. The charges are input as edge attributes $a{ij} = c_i c_j$. The model outputs the last layer coordinates $\mathbf{x}^L$ as the estimated positions. We compare our method to its non equivariant Graph Neural Network (GNN) cousin, and the equivariant methods: Radial Field [12], Tensor Field Networks and the SE(3) Transformer. All algorithms are composed of 4 layers and have been trained under the same conditions, batch size 100, 10.000 epochs, Adam optimizer, the learning rate was tuned independently for each model. We used 64 features for the hidden layers in the Radial Field, the GNN and our EGNN. As non-linearity we used the Swish activation function [31]. For TFN and the SE(3) Transformer we swept over different number of vector types and features and chose those that provided the best performance. Further implementation details are provided in Appendix C.1. A Linear model that simply considers the motion equation $\mathbf{p}^{(t)} = \mathbf{p}^{(0)} + \mathbf{v}^{(0)}t$ is also included as a baseline. We also provide the average forward pass time in seconds for each of the models for a batch of 100 samples in a GTX 1080 Ti GPU.

:Table 2: Mean Squared Error for the future position estimation in the N-body system experiment, and forward time in seconds for a batch size of 100 samples running in a GTX 1080Ti GPU.

| Method | MSE | Forward time (s) |

|---|---|---|

| Linear | 0.0819 | .0001 |

| SE(3) Transformer | 0.0244 | .1346 |

| Tensor Field Network | 0.0155 | .0343 |

| Graph Neural Network | 0.0107 | .0032 |

| Radial Field | 0.0104 | .0039 |

| EGNN | 0.0071 | .0062 |

Results As shown in Table 2 our model significantly outperforms the other equivariant and non-equivariant alternatives while still being efficient in terms of running time. It reduces the error with respect to the second best performing method by a $32%$. In addition it doesn't require the computation of spherical harmonics which makes it more time efficient than Tensor Field Networks and the SE(3) Transformer.

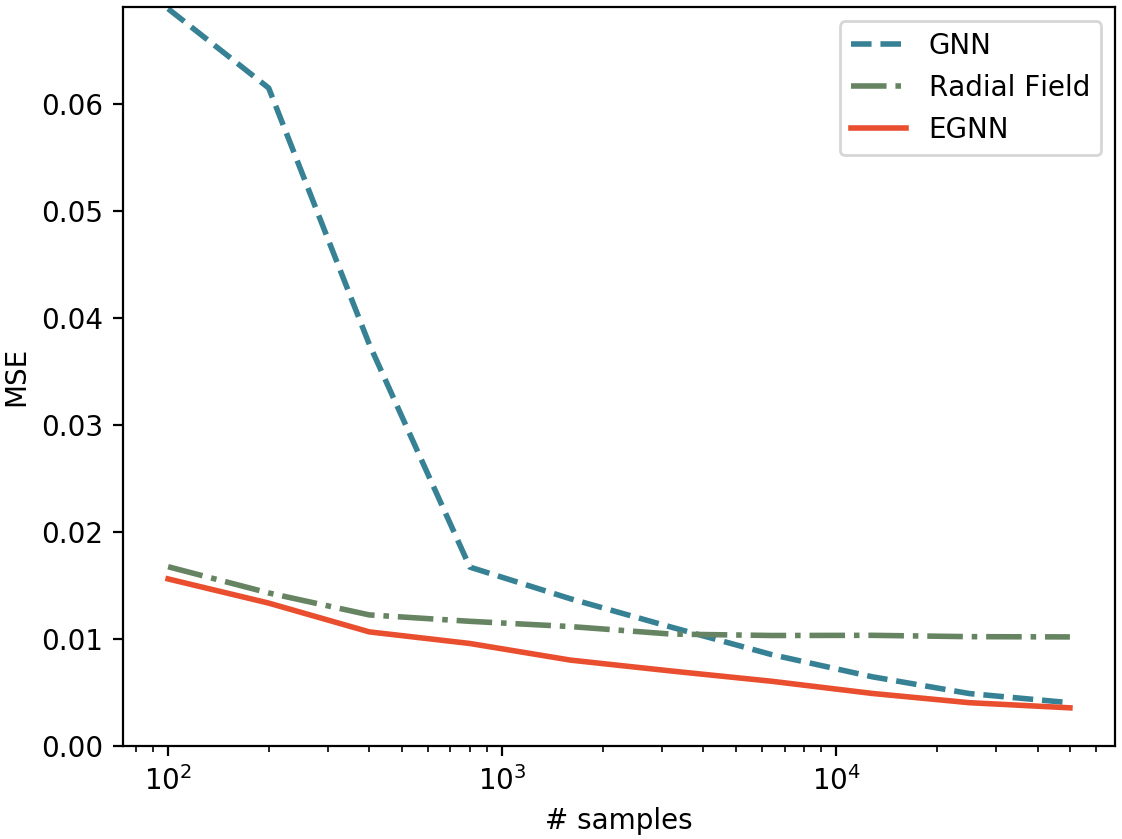

Analysis for different number of training samples: We want to analyze the performance of our EGNN in the small and large data regime. In the following, we report on a similar experiment as above, but instead of using 3.000 training samples we generated a new training partition of 50.000 samples and we sweep over different amounts of data from 100 to 50.000 samples. We compare the performances of our EGNN vs its non-equivariant GNN counterpart and the Radial Field algorithm. Results are presented in Figure 2. Our method outperforms both Radial Field and GNNs in the small and large data regimes. This shows the EGNN is more data efficient than GNNs since it doesn't require to generalize over rotations and translations of the data while it ensembles the flexibility of GNNs in the larger data regime. Due to its high model bias, the Radial Field algorithm performs well when data is scarce but it is unable to learn the subtleties of the dataset as we increase the training size. In summary, our EGNN benefits from both the high bias of $\mathrm{E}(n)$ methods and the flexibility of GNNs.

5.2. Graph Autoencoder

A Graph Autoencoder can learn unsupervised representations of graphs in a continuous latent space [32, 33]. In this experiment section we use our EGNN to build an Equivariant Graph Autoencoder. We will explain how Graph Autoencoders can benefit from equivariance and we will show how our method outperforms standard GNN autoencoders in the provided datasets. This problem is particularly interesting since the embedding space can be scaled to larger dimensions and is not limited to a 3 dimensional Euclidean space.

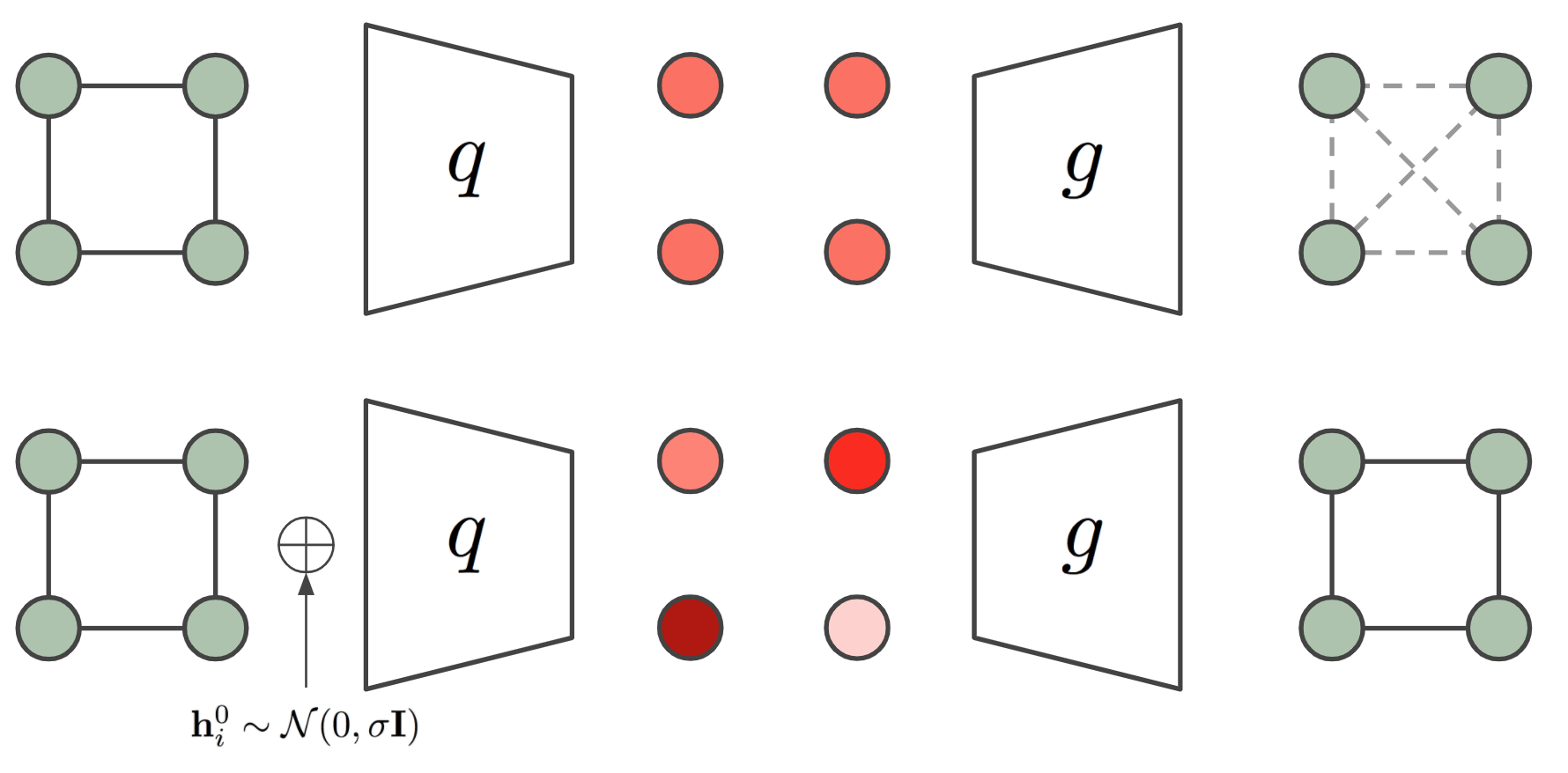

Similarly to the work of [32] further extended by section 3.3 in [34], our graph auto-encoder $\mathbf{z} = q(\mathcal{G})$ embeds a graph $\mathcal{G}$ into a set of latent nodes $\mathbf{z} = {\mathbf{z}_1, \dots \mathbf{z}_M } \in \mathbb{R}^{M \times n}$, where $M$ is the number of nodes and $n$ the embedding size per node. Notice this may reduce the memory complexity to store the graphs from $O(M^2)$ to $O(Mn)$ where $n$ may depend on $M$ for a certain approximation error tolerance. This differs from the variational autoencoder proposed in [33] which embeds the graph in a single vector $\mathbf{z} \in \mathbb{R}^K$, which causes the reconstruction to be computationally very expensive since the nodes of the decoded graph have to be matched again to the ground truth. In addition to the introduced graph generation and representation learning methods, it is worth it mentioning that in the context of graph compression other methods [35] can be used.

More specifically, we will compare our Equivariant Graph Auto-Encoder in the task presented in [34] where a graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ with node features $H \in \mathbb{R}^{M \times \text{nf}}$ and adjacency matrix $A \in {0, 1}^{M \times M}$ is embedded into a latent space $\mathbf{z} = q(H, A) \in \mathbb{R}^{M \times n}$. Following [32, 34], we are only interested in reconstructing the adjacency matrix $A$ since the datasets we will work with do not contain node features. The decoder $g(\cdot)$ proposed by [34] takes as input the embedding space $\mathbf{z}$ and outputs the reconstructed adjacency matrix $\hat{A} = g(\mathbf{z})$, this decoder function is defined as follows:

$ \hat{A}_{ij} = g_e(\mathbf{z}i, \mathbf{z}j)=\frac{1}{1 + \mathrm{exp}(w\left| \mathbf{z}{i}- \mathbf{z}{j}\right|^{2} + b)}\tag{9} $

Where $w$ and $b$ are its only learnable parameters and $g_e(\cdot)$ is the decoder edge function applied to every pair of node embeddings. It reflects that edge probabilities will depend on the relative distances among node embeddings. The training loss is defined as the binary cross entropy between the estimated and the ground truth edges $\mathcal{L} = \sum_{ij}\mathrm{BCE}(\hat{A}{ij}, A{ij})$.

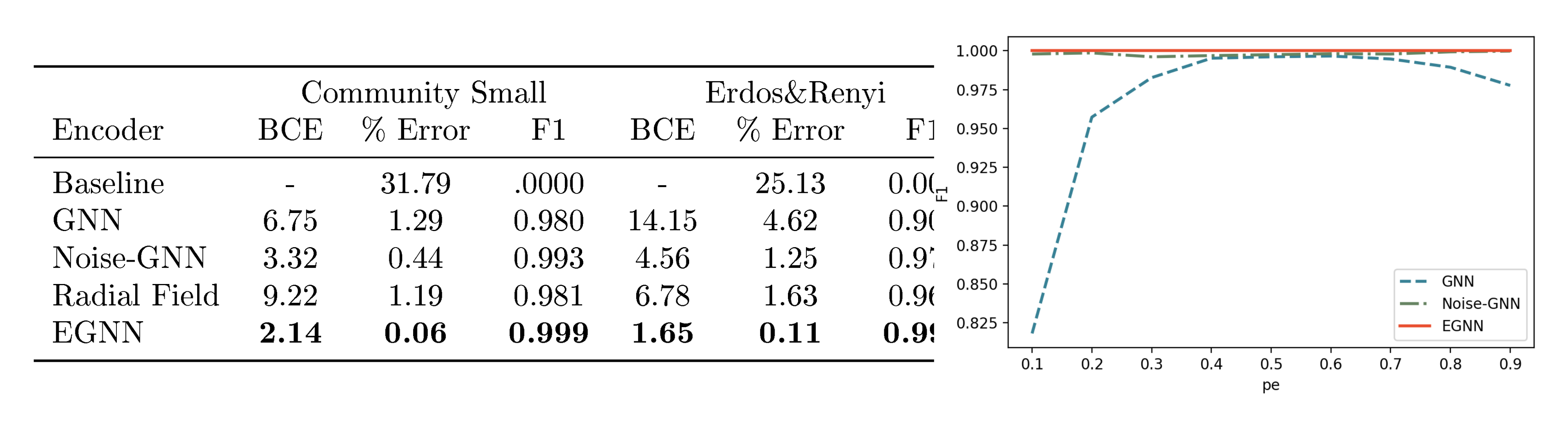

The symmetry problem: The above stated autoencoder may seem straightforward to implement at first sight but in some cases there is a strong limitation regarding the symmetry of the graph. Graph Neural Networks are convolutions on the edges and nodes of a graph, i.e. the same function is applied to all edges and to all nodes. In some graphs (e.g. those defined only by its adjacency matrix) we may not have input features in the nodes, and for that reason the difference among nodes relies only on their edges or neighborhood topology. Therefore, if the neighborhood of two nodes is exactly the same, their encoded embeddings will be the same too. A clear example of this is a cycle graph (an example of a 4 nodes cycle graph is provided in Figure 3). When running a Graph Neural Network encoder on a node featureless cycle graph, we will obtain the exact same embedding for each of the nodes, which makes it impossible to reconstruct the edges of the original graph from the node embeddings. The cycle graph is a severe example where all nodes have the exact same neighborhood topology but these symmetries can be present in different ways for other graphs with different edge distributions or even when including node features if these are not unique.

An ad-hoc method to break the symmetry of the graph is introduced by [34]. This method introduces noise sampled from a Gaussian distribution into the input node features of the graph $\mathbf{h}_i^{0} \sim \mathcal{N}(\mathbf{0}, \sigma\mathbf{I})$. This noise allows different representations for all node embeddings and as a result the graph can be decoded again, but it comes with a drawback, the network has to generalize over the new introduced noise distribution. Our Equivariant Graph Autoencoder will remain translation and rotation equivariant to this sampled noise which we find makes the generalization much easier. Another way of looking at this is considering the sampled noise makes the node representations go from structural to positional [36] where $\mathrm{E}(n)$ equivariance may be beneficial. In our case we will simply input this noise as the input coordinates $\mathbf{x}^{0} \sim \mathcal{N}(\mathbf{0}, \sigma\mathbf{I}) \in \mathbb{R}^{M \times n}$ of our EGNN which will output an equivariant transformation of them $\mathbf{x}^L$, this output will be used as the embedding of the graph (i.e. $\mathbf{z} = \mathbf{x}^L$) which is the input to the decoder from Equation 9.

Dataset: We generated community-small graphs [37, 34] by running the original code from [37]. These graphs contain $12\leq M \leq20$ nodes. We also generated a second dataset using the Erdos&Renyi generative model [38] sampling random graphs with an initial number of $7\leq M \leq16$ nodes and edge probability $p_e=0.25$. We sampled $5.000$ graphs for training, $500$ for validation and $500$ for test for both datasets. Each graph is defined as and adjacency matrix $A \in {0, 1}^{M \times M}$.

Implementation details: Our Equivariant Graph Auto-Encoder is composed of an EGNN encoder followed by the decoder from Equation 9. The graph edges $A_{ij}$ are input as edge attributes $a_{ij}$ in Equation 3. The noise used to break the symmetry is input as the coordinates $\mathbf{x}^0 \sim \mathcal{N(\mathbf{0}, \sigma\mathbf{I})} \in \mathbb{R}^{M \times n}$ in the first layer and $\mathbf{h}^0$ is initialized as ones since we are working with featureless graphs. As mentioned before, the encoder outputs an equivariant transformation on the coordinates which is the graph embedding and input to the decoder $\mathbf{z} = \mathbf{x}^L \in \mathbb{R}^{M\times n}$. We use $n=8$ dimensions for the embedding space. We compare the EGNN to its GNN cousin, we also compare to Noise-GNN which is an adaptation of our GNN to match the setting from [34], and we also include the Radial Field algorithm as an additional baseline. All four models have 4 layers, 64 features for the hidden layers, the Swish activation function as a non-linearity and they were all trained for 100 epochs using the Adam optimizer and learning rate $10^{-4}$. More details are provided in Appendix C.2. Since the number of nodes is larger than the number of layers, the receptive field of a GNN may not comprise the whole graph which can make the comparison unfair with our EGNN. To avoid this limitation, all models exchange messages among all nodes and the edge information is provided as edge attributes $a_{ij} = A_{ij}$ in all of them.

Results: In the table from Figure 5 we report the Binary Cross Entropy loss between the estimated and ground truth edges, the % Error which is defined as the percentage of wrong predicted edges with respect to the total amount of potential edges, and the F1 score of the edge classification, all numbers refer to the test partition. We also include a "Baseline" that predicts all edges as missing $\hat{A}_{ij}=0$. The standard GNN seems to suffer from the symmetry problem and provides the worst performance. When introducing noise (Noise-GNN), both the loss and the error decrease showing that it is actually useful to add noise to the input nodes. Finally, our EGNN remains $\mathrm{E}(n)$ equivariant to this noise distribution and provides the best reconstruction with a $0.11%$ error in the Erdos&Renyi dataset and close to optimal $0.06%$ in the Community Small dataset. A further analysis of the reconstruction error for different $n$ embedding sizes is reported in Appendix D.1.

\begin{tabular}{l c c c c c c c c c c c c}

\toprule

Task & $\alpha$ & $\Delta \varepsilon$ & $\varepsilon_{\mathrm{HOMO}}$ & $\varepsilon_{\mathrm{LUMO}}$ & $\mu$ & $C_{\nu}$ & $G$ & $H$ & $R^2$ & $U$ & $U_0$ & ZPVE \\

Units & bohr$^3$ & meV & meV & meV & D & cal/mol K & meV & meV & bohr$^3$ & meV & meV & meV \\

\midrule

NMP & .092 & 69 & 43 & 38 & .030 & .040 & 19 & 17 & .180 & 20 & 20 & 1.50 \\

Schnet & .235 & 63 & 41 & 34 & .033 & .033 & 14 & 14 & .073 & 19 & 14 & 1.70 \\

Cormorant & .085 & 61 & 34 & 38 & .038 & .026 & 20 & 21 & .961 & 21 & 22 & 2.03 \\

L1Net & .088 & 68 & 46 & 35 & .043 & .031 & 14 & 14 & .354 & 14 & 13 & 1.56\\

LieConv & .084 & 49 & 30 & 25 & .032 & .038 & 22 & 24 & .800 & 19 & 19 & 2.28 \\

DimeNet++* & .044 & 33 & 25 & 20 & .030 & .023 & 8 & 7 & .331 & 6 & 6 & 1.21 \\

TFN & .223 & 58 & 40 & 38 & .064 & .101 & - & - & - & - & - & - \\

SE(3)-Tr. & .142 & 53 & 35 & 33 & .051 & .054 & - & - & - & - & - & - \\

\midrule

EGNN & .071 & 48 & 29 & 25 & .029 & .031 & 12 & 12 & .106 & 12 & 11 & 1.55 \\

\bottomrule

\end{tabular}

Overfitting the training set: We explained the symmetry problem and we showed the EGNN outperforms other methods in the given datasets. Although we observed that adding noise to the GNN improves the results, it is difficult to exactly measure the impact of the symmetry limitation in these results independent from other factors such as generalization from the training to the test set. In this section we conduct an experiment where we train the different models in a subset of 100 Erdos&Renyi graphs and embedding size $n=16$ with the aim to overfit the data. We evaluate the methods on the training data. In this experiment the GNN is unable to fit the training data properly while the EGNN can achieve perfect reconstruction and Noise-GNN close to perfect. We sweep over different $p_e$ sparsity values from $0.1$ to $0.9$ since the symmetry limitation is more present in very sparse or very dense graphs. We report the F1 scores of this experiment in the right plot of Figure 5.

In this experiment we showed that $\mathrm{E}(n)$ equivariance improves performance when embedding graphs in a continuous space as a set of nodes in dimension $n$. Even though this is a simple reconstruction task, we think this can be a useful step towards generating graphs or molecules where often graphs (i.e. edges) are decoded as pairwise distances or similarities between nodes e.g. [32, 34, 39], and these metrics (e.g. eq. 9) are $\mathrm{E}(n)$ invariant. Additionally this experiment also showed that our method can successfully perform in a $\mathrm{E}(n)$ equivariant task for higher dimensional spaces where $n>3$.

5.3. Molecular data — QM9

The QM9 dataset [5] has become a standard in machine learning as a chemical property prediction task. The QM9 dataset consists of small molecules represented as a set of atoms (up to 29 atoms per molecule), each atom having a 3D position associated and a five dimensional one-hot node embedding that describe the atom type (H, C, N, O, F). The dataset labels are a variety of chemical properties for each of the molecules which are estimated through regression. These properties are invariant to translations, rotations and reflections on the atom positions. Therefore those models that are E(3) invariant are highly suitable for this task.

We imported the dataset partitions from [25], 100K molecules for training, 18K for validation and 13K for testing. A variety of 12 chemical properties were estimated per molecule. We optimized and report the Mean Absolute Error between predictions and ground truth.

Implementation details: Our EGNN receives as input the 3D coordinate locations of each atom which are provided as $\mathbf{x}_i^0$ in Equation 3 and an embedding of the atom properties which is provided as input node features $\mathbf{h}_i^0$. Since this is an invariant task and also $\mathbf{x}^0$ positions are static, there is no need to update the particle's position $\mathbf{x}$ by running Equation 4 as we did in previous experiments. Consequently, we tried both manners and we didn't notice any improvement by updating $\mathbf{x}$. When not updating the particle's position (i.e. skipping Equation 4), our model becomes $\mathrm{E}(n)$ invariant, which is analogous to a standard GNN where all relative squared norms between pairs of points $| \mathbf{x}_i - \mathbf{x}_j |^2$ are inputted to the edge operation (eq. 3). Additionally, since we are not provided with an adjacency matrix and molecules can scale up to 29 nodes, we use the extension of our model from Section 3.3 that infers a soft estimation of the edges. Our EGNN network consists of 7 layers, 128 features per hidden layer and the Swish activation function as a non-linearity. A sum-pooling operation preceded and followed by two layers MLPs maps all the node embeddings $\mathbf{h}^{L}$ from the output of the EGNN to the estimated property value. Further implementation details are reported in Appendix. Appendix C. We compare to NMP [11], Schnet [13], Cormorant [25], L1Net [26], LieConv [9], DimeNet++ [24], TFN [7] and SE(3)-Tr. [8].

Results are presented in Table 3. Our method reports very competitive results in all property prediction tasks while remaining relatively simple, i.e. not introducing higher order representations, angles or spherical harmonics. Perhaps, surprisingly, we outperform other equivariant networks that consider higher order representations while in this task, we are only using type-0 representations (i.e. relative distances) to define the geometry of the molecules. In Appendix E we prove that when only positional information is given (i.e. no velocity or higher order type features), then the geometry is completely defined by the norms in-between points up to $\mathrm{E}(n)$-transformations, in other words, if two collections of points separated by $\mathrm{E}(n)$ transformations are considered to be identical, then the relative norms between points is a unique identifier of the collection.

6. Conclusions

Section Summary: Equivariant graph neural networks are gaining popularity in the natural and medical sciences because they offer a powerful way to study molecules and their characteristics. This research introduces a new, efficient, and user-friendly deep learning model for graphs that outperforms existing top methods across many tasks. The authors believe it could significantly advance fields like drug development, protein structure prediction, material design, and 3D computer vision.

Equivariant graph neural networks are receiving increasing interest from the natural and medical sciences as they represent a new tool for analyzing molecules and their properties. In this work, we presented a new $\mathrm{E}(n)$ equivariant deep architecture for graphs that is computationally efficient, easy to implement, and significantly improves over the current state-of-the-art on a wide range of tasks. We believe these properties make it ideally suited to make a direct impact on topics such as drug discovery, protein folding and the design of new materials, as well as applications in 3D computer vision.

We would like to thank Patrick Forré for his support to formalize the invariance features identification proof.

A. Equivariance Proof

Section Summary: This section proves that the model's output remains consistent when the input coordinates are shifted, rotated, or reflected, ensuring the system behaves predictably under these spatial changes. It starts by assuming initial features ignore absolute positions or orientations, then shows that distances between points stay the same regardless of such transformations, making intermediate calculations stable. Through a step-by-step derivation, it demonstrates that updated coordinates transform in the same way as the input, while the features remain unchanged, confirming the model's overall equivariance.

In this section we prove that our model is translation equivariant on $\mathbf{x}$ for any translation vector $g \in \mathbb{R}^n$ and it is rotation and reflection equivariant on $\mathbf{x}$ for any orthogonal matrix $Q \in \mathbb{R}^{n \times n}$. More formally, we will prove the model satisfies:

$ Q \mathbf{x}^{l+1} + g, \mathbf{h}^{l+1} = \mathrm{EGCL}(Q \mathbf{x}^l + g, \mathbf{h}^l) $

We will analyze how a translation and rotation of the input coordinates propagates through our model. We start assuming $\mathbf{h}^0$ is invariant to $\mathrm{E}(n)$ transformations on $\mathbf{x}$, in other words, we do not encode any information about the absolute position or orientation of $\mathbf{x}^0$ into $\mathbf{h}^0$. Then, the output $\mathbf{m}{ij}$ of Equation 3 will be invariant too since the distance between two particles is invariant to translations $| \mathbf{x}{i}^{l} +g - [\mathbf{x}{j}^{l} + g]|^{2} = | \mathbf{x}{i}^{l} - \mathbf{x}{j}^{l}|^{2}$, and it is invariant to rotations and reflections $|Q \mathbf{x}{i}^{l} - Q \mathbf{x}{j}^{l}|^{2} = (\mathbf{x}i^l- \mathbf{x}j^l)^\top Q^\top Q (\mathbf{x}{i}^{l} - \mathbf{x}{j}^{l}) = (\mathbf{x}i^l- \mathbf{x}j^l)^\top \mathbf{I} (\mathbf{x}{i}^{l} - \mathbf{x}{j}^{l}) = | \mathbf{x}{i}^{l} - \mathbf{x}_{j}^{l}|^{2}$ such that the edge operation becomes invariant:

$ \mathbf{m}{i, j} =\phi{e}\left(\mathbf{h}{i}^{l}, \mathbf{h}{j}^{l}, \left|Q \mathbf{x}{i}^{l} + g- [Q \mathbf{x}{j}^{l} + g]\right|^{2}, a_{i j}\right) = \phi_{e}\left(\mathbf{h}{i}^{l}, \mathbf{h}{j}^{l}, \left| \mathbf{x}{i}^{l} - \mathbf{x}{j}^{l} \right|^{2}, a_{i j}\right) $

The second equation of our model (eq. 4) that updates the coordinates $\mathbf{x}$ is $\mathrm{E}(n)$ equivariant. Following, we prove its equivariance by showing that an $\mathrm{E}(n)$ transformation of the input leads to the same transformation of the output. Notice $\mathbf{m}_{ij}$ is already invariant as proven above. We want to show:

$ Q \mathbf{x}{i}^{l+1} + g = Q \mathbf{x}{i}^{l} + g +C\sum_{j \neq i}\left(Q \mathbf{x}{i}^{l} + g - [Q \mathbf{x}{j}^{l} + g]\right) \phi_{x}\left(\mathbf{m}_{i, j}\right) $

Derivation.

$ \begin{align*} Q \mathbf{x}{i}^{l} + g +C\sum{j \neq i}\left(Q \mathbf{x}{i}^{l} + g - Q \mathbf{x}{j}^{l} - g\right) \phi_{x}\left(\mathbf{m}{i, j}\right) &= Q \mathbf{x}{i}^{l} + g +QC\sum_{j \neq i}\left(\mathbf{x}{i}^{l} - \mathbf{x}{j}^{l}\right) \phi_{x}\left(\mathbf{m}{i, j}\right)\ &= Q\left(\mathbf{x}{i}^{l} +C\sum_{j \neq i}\left(\mathbf{x}{i}^{l} - \mathbf{x}{j}^{l}\right) \phi_{x}\left(\mathbf{m}{i, j}\right) \right) + g\ &= Q \mathbf{x}{i}^{l+1} + g \end{align*} $

Therefore, we have proven that rotating and translating $\mathbf{x}^l$ results in the same rotation and translation on $\mathbf{x}^{l+1}$ at the output of Equation 4.

Furthermore equations 5 and 6 only depend on $\mathbf{m}_{ij}$ and $\mathbf{h}^l$ which as saw at the beginning of this proof, are $\mathrm{E}(n)$ invariant, therefore the output of Equation 6 $\mathbf{h}^{l+1}$ will be invariant too. Thus concluding that a transformation $Q \mathbf{x}^l +g$ on $\mathbf{x}^l$ will result in the same transformation on $\mathbf{x}^{l+1}$ while $\mathbf{h}^{l+1}$ will remain invariant to it such that $Q \mathbf{x}^{l+1} + g, \mathbf{h}^{l+1} = \mathrm{EGCL}(Q \mathbf{x}^l + g, \mathbf{h}^l)$ is satisfied.

B. Re-formulation for velocity type inputs

Section Summary: This section reformulates an equivariant graph neural network (EGNN) layer to handle velocity inputs, allowing it to process initial velocities alongside particle positions and hidden features to produce updated positions, velocities, and features while maintaining symmetry under rotations and translations. The update process involves computing messages between particles based on their features and distances, then adjusting velocities using these messages and position differences before shifting positions by the new velocities. A proof demonstrates that this velocity-based approach preserves the model's equivariance property, ensuring consistent outputs when the input system is rotated or translated as a whole.

In this section we write down the EGNN transformation layer $\mathbf{h}^{l+1}, \mathbf{x}^{l+1}, \mathbf{v}^{l+1} = \text{EGCL}[\mathbf{h}^{l}, \mathbf{x}^{l}, \mathbf{v}^{\text{init}}, \mathcal{E}]$ that can take in velocity input and output channels. We also prove it remains $\mathrm{E}(n)$ equivariant.

$ \begin{align*} \mathbf{m}{i j} &=\phi{e}\left(\mathbf{h}{i}^{l}, \mathbf{h}{j}^{l}, \left| \mathbf{x}{i}^{l}- \mathbf{x}{j}^{l}\right|^{2}, a_{i j}\right) \ \mathbf{v}{i}^{l+1}&= \phi{v}\left(\mathbf{h}{i}^l\right)\mathbf{v}{i}^{\text{init}} +C\sum_{j \neq i}\left(\mathbf{x}{i}^{l}- \mathbf{x}{j}^{l}\right) \phi_{x}\left(\mathbf{m}{i j}\right) \ \mathbf{x}{i}^{l+1} &=\mathbf{x}{i}^{l}+ \mathbf{v}i^{l+1} \ \mathbf{m}{i} &=\sum{j \neq i} \mathbf{m}{i j} \ \mathbf{h}{i}^{l+1} &=\phi_{h}\left(\mathbf{h}{i}^l, \mathbf{m}{i}\right) \end{align*} $

B.1. Equivariance proof for velocity type inputs

In this subsection we prove that the velocity types input formulation of our model is also $\mathrm{E}(n)$ equivariant on $\mathbf{x}$. More formally, for any translation vector $g \in \mathbb{R}^n$ and for any orthogonal matrix $Q \in \mathbb{R}^{n \times n}$, the model should satisfy:

$ \mathbf{h}^{l+1}, Q \mathbf{x}^{l+1} + g, Q \mathbf{v}^{l+1} = \text{EGCL}[\mathbf{h}^{l}, Q \mathbf{x}^{l} + g, Q \mathbf{v}^{\text{init}}, \mathcal{E}] $

In Appendix A we already proved the equivariance of our EGNN (Section 3) when not including vector type inputs. In its velocity type inputs variant we only replaced its coordinate updates (eq. 4) by Equation 7 that includes velocity. Since this is the only modification we will only prove that Equation 7 re-written below is equivariant.

$ \begin{align*} \mathbf{v}{i}^{l+1}&= \phi{v}\left(\mathbf{h}{i}^l\right)\mathbf{v}{i}^{\text{init}} +C\sum_{j \neq i}\left(\mathbf{x}{i}^{l}- \mathbf{x}{j}^{l}\right) \phi_{x}\left(\mathbf{m}{i j}\right) \ \mathbf{x}{i}^{l+1} &=\mathbf{x}_{i}^{l}+ \mathbf{v}_i^{l+1} \ \end{align*} $

First, we prove the first line preserves equivariance, that is we want to show:

$ Q \mathbf{v}{i}^{l+1} = \phi{v}\left(\mathbf{h}{i}^l\right)Q \mathbf{v}{i}^{\text{init}} +C\sum_{j \neq i}\left(Q \mathbf{x}{i}^{l} + g - [Q \mathbf{x}{j}^{l} + g]\right) \phi_{x}\left(\mathbf{m}_{i j}\right) $

Derivation.

$ \begin{align} \phi_{v}\left(\mathbf{h}{i}^l\right)Q \mathbf{v}{i}^{\text{init}} +C\sum_{j \neq i}\left(Q \mathbf{x}{i}^{l} + g - [Q \mathbf{x}{j}^{l} + g]\right) \phi_{x}\left(\mathbf{m}{i j}\right) &= Q\phi{v}\left(\mathbf{h}{i}^l\right)\mathbf{v}{i}^{\text{init}} +QC\sum_{j \neq i}\left(\mathbf{x}{i}^{l} - \mathbf{x}{j}^{l} \right) \phi_{x}\left(\mathbf{m}{i j}\right) \ &= Q\left(\phi{v}\left(\mathbf{h}{i}^l\right)\mathbf{v}{i}^{\text{init}} +C\sum_{j \neq i}\left(\mathbf{x}{i}^{l} - \mathbf{x}{j}^{l} \right) \phi_{x}\left(\mathbf{m}{i j}\right)\right) \ &= Q \mathbf{v}{i}^{l+1} \end{align} $

Finally, it is straightforward to show the second equation is also equivariant, that is we want to show $Q \mathbf{x}{i}^{l+1} + g = Q\mathbf{x}{i}^{l}+ g + Q\mathbf{v}_i^{l+1}$

Derivation.

$ \begin{align*} Q\mathbf{x}_{i}^{l}+ g + Q\mathbf{v}i^{l+1} &= Q\left (\mathbf{x}{i}^{l} +\mathbf{v}i^{l+1} \right) +g \ &= Q \mathbf{x}{i}^{l+1} + g \end{align*} $

Concluding we showed that an $\mathrm{E}(n)$ transformation on the input set of points results in the same transformation on the output set of points such that $\mathbf{h}^{l+1}, Q \mathbf{x}^{l+1} + g, Q \mathbf{v}^{l+1} = \text{EGCL}[\mathbf{h}^{l}, Q \mathbf{x}^{l} + g, Q \mathbf{v}^{\text{init}}, \mathcal{E}]$ is satisfied.

C. Implementation details

Section Summary: This section explains the technical setup for the experiments, starting with the core components of the EGNN model, which include simple neural network layers for processing edges, coordinates, and nodes in a consistent way across all tests. For the dynamical systems experiments, it describes a modified 3D particle simulation dataset with thousands of trajectories for training and testing, along with details on building and training the EGNN alongside other models like GNNs and transformer networks using standard optimization techniques over many iterations. It then introduces the graph autoencoder experiments, using generated datasets like Community Small and Erdos-Renyi graphs, though specifics taper off.

In this Appendix section we describe the implementation details of the experiments. First, we describe those parts of our model that are the same across all experiments. Our EGNN model from Section 3 contains the following three main learnable functions.

The edge function $\phi_e$ (eq. 3) is a two layers MLP with two Swish non-linearities: Input $\xrightarrow{}$ LinearLayer() $\xrightarrow{}$ Swish() $\xrightarrow{}$ LinearLayer() $\xrightarrow{}$ Swish() $\xrightarrow{}$ Output.

The coordinate function $\phi_x$ (eq. 4) consists of a two layers MLP with one non-linearity: $\mathbf{m}_{ij}$ $\xrightarrow{}$ LinearLayer() $\xrightarrow{}$ Swish() $\xrightarrow{}$ LinearLayer() $\xrightarrow{}$ Output

The node function $\phi_h$ (eq. 6) consists of a two layers MLP with one non-linearity and a residual connection:

[$\mathbf{h}_i^{l}$, $\mathbf{m}_i$] $\xrightarrow{}$ LinearLayer() $\xrightarrow{}$ Swish() $\xrightarrow{}$ LinearLayer() $\xrightarrow{}$ Addition($\mathbf{h}^l_i$) $\xrightarrow{}$ $\mathbf{h}^{l+1}_i$

These functions are used in our EGNN across all experiments. Notice the GNN (eq. 2) also contains and edge operation and a node operation $\phi_e$ and $\phi_h$ respectively. We use the same functions described above for both the GNN and the EGNN such that comparisons are as fair as possible.

C.1. Implementation details for Dynamical Systems

Dataset

In the dynamical systems experiment we used a modification of the Charged Particle's N-body (N=5) system from [6]. Similarly to [8], we extended it from 2 to 3 dimensions customizing the original code from (https://github.com/ethanfetaya/NRI) and we removed the virtual boxes that bound the particle's positions. The sampled dataset consists of 3.000 training trajectories, 2.000 for validation and 2.000 for testing. Each trajectory has a duration of 1.000 timesteps. To move away from the transient phase, we actually generated trajectories of 5.000 time steps and sliced them from timestep $3.000$ to timestep $4.000$ (1.000 time steps into the future) such that the initial conditions are more realistic than the Gaussian Noise initialization from which they are initialized.

In our second experiment, we sweep from 100 to 50.000 training samples, for this we just created a new training partition following the same procedure as before but now generating 50.000 trajectories instead. The validation and test partition remain the same from last experiment.

Models

All models are composed of 4 layers, the details for each model are the following.

- EGNN: For the EGNN we use its variation that considers vector type inputs from Section 3.2. This variation adds the function $\phi_v$ to the model which is composed of two linear layers with one non-linearity: Input $\xrightarrow{}$ LinearLayer() $\xrightarrow{}$ Swish() $\xrightarrow{}$ LinearLayer() $\xrightarrow{}$ Output. Functions $\phi_e$, $\phi_x$ and $\phi_h$ that define our EGNN are the same than for all experiments and are described at the beginning of this Appendix C.

- GNN: The GNN is also composed of 4 layers, its learnable functions edge operation $\phi_e$ and node operation $\phi_h$ from We chose the same functions for both models to ensure a fair comparison. In the GNN case, the initial position $\mathbf{p}^0$ and velocity $\mathbf{v}^0$ from the particles is passed through a linear layer and inputted into the GNN first layer $\mathbf{h}^0$. The particle's charges are inputted as edge attributes $a_{ij} = c_i c_j$. The output of the GNN $\mathbf{h}^L$ is passed through a two layers MLP that maps it to the estimated position.

- Radial Field: The Radial Field algorithm is described in the Related Work Section 4, its only parameters are contained in its edge operation $\phi_{\mathrm{rf}}()$ which in our case is a two layers MLP with two non linearities Input $\xrightarrow{}$ LinearLayer() $\xrightarrow{}$ Swish() $\xrightarrow{}$ LinearLayer() $\xrightarrow{}$ Tanh $\xrightarrow{}$ Output. Notice we introduced a Tanh at the end of the MLP which fixes some instability issues that were causing this model to diverge in the dynamical system experiment. We also augmented the Radial Field algorithm with the vector type inputs modifications introduced in Section 3.2. In addition to the norms between pairs of points, $\phi_{\mathrm{rf}}()$ also takes as input the particle charges $c_i c_j$.

- Tensor Field Network: We used the Pytorch implementation from https://github.com/FabianFuchsML/se3-transformer-public. We swept over different hyper paramters, degree $\in$ 2, 3, 4, number of features $\in$ 12, 24, 32, 64, 128. We got the best performance in our dataset for degree 2 and number of features 32. We used the Relu activation layer instead of the Swish for this model since it provided better performance.

- SE(3) Transformers: For the SE(3)-Transformer we used code from https://github.com/FabianFuchsML/se3-transformer-public. Notice this implementation has only been validated in the QM9 dataset but it is the only available implementation of this model. We swept over different hyperparamters degree $\in$ 1, 2, 3, 4, number of features $\in$ 16, 32, 64 and divergence $\in$ 1, 2, along with the learning rate. We obtained the best performance for degree 3, number of features 64 and divergence 1. As in Tensor Field Networks we obtained better results by using the Relu activation layer instead of the Swish.

Other implementation details

In Table 2 all models were trained for 10.000 epochs, batch size 100, Adam optimizer, the learning rate was fixed and independently chosen for each model. All models are 4 layers deep and the number of training samples was set to 3.000.

C.2. Implementation details for Graph Autoneoders

Dataset

In this experiment we worked with Community Small [37] and Erdos&Renyi [38] generated datasets.

- Community Small: We used the original code from [37] (https://github.com/JiaxuanYou/graph-generation) to generate a Community Small dataset. We sampled 5.000 training graphs, 500 for validation and 500 for testing.

- Erdos&Renyi is one of the most famous graph generative algorithms. We used the "gnp_random_graph($M$, $p$)" function from (https://networkx.org/) that generates random graphs when povided with the number of nodes $M$ and the edge probability $p$ following the Erdos&Renyi model. Again we generated 5.000 graphs for training, 500 for validation and 500 for testing. We set the edge probability (or sparsity value) to $p=0.25$ and the number of nodes $M$ ranging from 7 to 16 deterministically uniformly distributed. Notice that edges are generated stochastically with probability $p$, therefore, there is a chance that some nodes are left disconnected from the graph, "gnp_random_graph($M$, $p$)" function discards these disconnected nodes such that even if we generate graphs setting parameters to $7 \leq M \leq 16$ and $p=0.25$ the generated graphs may have less number of nodes.

Finally, in the graph autoencoding experiment we also overfitted in a small partition of 100 samples (Figure 4) for the Erdos&Renyi graphs described above. We reported results for different $p$ values ranging from $0.1$ to $0.9$. For each $p$ value we generated a partition of 100 graphs with initial number of nodes between $7 \leq M \leq 16$ using the Erdos&Renyi generative model.

Models

All models consist of 4 layers, 64 features for the hidden layers and the Swish activation function as a non linearity. The EGNN is defined as explained in Section 3 without any additional modules (i.e. no velocity type features or inferring edges). The functions $\phi_e$, $\phi_x$ and $\phi_h$ are defined at the beginning of this Appendix C. The GNN (eq. 2) mimics the EGNN in terms that it uses the same $\phi_h$ and $\phi_e$ than the EGNN for its edge and node updates. The Noise-GNN is exactly the same as the GNN but inputting noise into the $\mathbf{h}0$ features. Finally the Radial Field was defined in the Related Related work Section 4 which edge's operation $\phi{\mathrm{rf}}$ consists of a two layers MLP: Input $\xrightarrow{}$ Linear() $\xrightarrow{}$ Swish() $\xrightarrow{}$ Linear() $\xrightarrow{}$ Output.

Other implementation details

All experiments have been trained with learning rate $10^{-4}$, batch size 1, Adam optimizer, weight decay $10^{-16}$, 100 training epochs for the 5.000 samples sized datasets performing early stopping for the minimum Binary Cross Entropy loss in the validation partition. The overfitting experiments were trained for 10.000 epochs on the 100 samples subsets.

C.3. Implementation details for QM9

For QM9 [5] we used the dataset partitions from [25]. We imported the dataloader from his code repository (https://github.com/risilab/cormorant) which includes his data-preprocessing. Additionally all properties have been normalized by substracting the mean and dividing by the Mean Absolute Deviation.

Our EGNN consists of 7 layers. Functions $\phi_e$ and $\phi_h$ are defined at the beginning of this Appendix C. Additionally, we use the module $\phi_{inf}$ presented in Section 3.3 that infers the edges . This function $\phi_{inf}$ is defined as a linear layer followed by a sigmoid: Input $\xrightarrow{}$ Linear() $\xrightarrow{}$ sigmoid() $\xrightarrow{}$ Output. Finally, the output of our EGNN $\mathbf{h}^L$ is forwarded through a two layers MLP that acts node-wise, a sum pooling operation and another two layers MLP that maps the averaged embedding to the predicted property value, more formally: $\mathbf{h}^L$ $\xrightarrow{}$ Linear() $\xrightarrow{}$ Swish() $\xrightarrow{}$ Linear() $\xrightarrow{}$ Sum-Pooling() $\xrightarrow{}$ Linear() $\xrightarrow{}$ Swish() $\xrightarrow{}$ Linear $\xrightarrow{}$ Property. The number of hidden features for all model hidden layers is 128.

We trained each property individually for a total of 1.000 epochs, we used Adam optimizer, batch size 96, weight decay $10^{-16}$, and cosine decay for the learning rate starting at at a lr= $5\cdot10^{-4}$ except for the Homo, Lumo and Gap properties where its initial value was set to $10^{-3}$.

D. Further experiments

Section Summary: This section describes additional tests on a graph autoencoder, which compresses and rebuilds network data. It compares the accuracy of reconstructed graphs across three models—GNN, Noise-GNN, and EGNN—using two datasets as the data compression size shrinks to small levels like 4, 6, or 8 dimensions. All models struggle with the tiniest sizes, but the EGNN model clearly performs better as the size increases.

D.1. Graph Autoencoder

In this section we present an extension of the Graph Autoencoder experiment Section 5.2. In Table 4 we report the approximation error of the reconstructed graphs as the embedding dimensionality $n$ is reduced $n \in {4, 6, 8 }$ in the Community Small and Erdos&Renyi datasets for the GNN, Noise-GNN and EGNN models. For small embedding sizes ($n=4$) all methods perform poorly, but as the embedding size grows our EGNN significantly outperforms the others.

E. Sometimes invariant features are all you need.

Section Summary: This section explains that when working with just the positions of points in space, the distances between them fully capture the shape and structure without needing more complex details like directions or angles. These distances stay the same even if the entire set of points is rotated or shifted, making them a reliable and simple way to describe geometry. The proof shows that equal distances between corresponding points in two sets mean the sets are identical in shape, just possibly repositioned in space, allowing basic invariant features to outperform fancier network designs.

Perhaps surprisingly we find our EGNNs outperform other equivariant networks that consider higher-order representations. In this section we prove that when only positional information is given (i.e. no velocity-type features) then the geometry is completely defined by the invariant distance norms in-between points, without loss of relevant information. As a consequence, it is not necessary to consider higher-order representation types of the relative distances, not even the relative differences as vectors. To be precise, note that these invariant features still need to be permutation equivariant, they are only $\mathrm{E}(n)$ invariant.

To be specific, we want to show that for a collection of points ${\mathbf{x}i}{i=1}^M$ the norm of in-between distances $\ell_2(\mathbf{x}_i, \mathbf{x}_j)$ are a unique identifier of the geometry, where collections separated by an $\mathrm{E}(n)$ transformations are considered to be identical. We want to show invariance of the norms under $\mathrm{E}(n)$ transformations and uniqueness: two point collections are identical (up to $\mathrm{E}(n)$ transform) when they have the same distance norms.

Invariance. Let ${\mathbf{x}_i}$ be a collection of $M$ points where $\mathbf{x}_i \in \mathbb{R}^n$ and the $\ell_2$ distances are $\ell_2(\mathbf{x}_i, \mathbf{x}_j)$. We want to show that all $\ell_2(\mathbf{x}_i, \mathbf{x}_j)$ are unaffected by $\mathrm{E}(n)$ transformations.

Proof. Consider an arbitrary $\mathrm{E}(n)$ transformation $\mathbb{R}^n \to \mathbb{R}^n : \mathbf{x} \mapsto Q \mathbf{x} + t$ where $Q$ is orthogonal and $t \in \mathbb{R}^n$ is a translation. Then for all $i, j$:

$ \begin{align*} \ell_2(Q \mathbf{x}_i + t, Q \mathbf{x}_j + t) &= \sqrt{} (Q \mathbf{x}_i + t - [Q \mathbf{x}_j + t])^T(Q \mathbf{x}_i + t - [Q \mathbf{x}_j + t]) = \sqrt{} (Q \mathbf{x}_i - Q \mathbf{x}_j)^T(Q \mathbf{x}_i - Q \mathbf{x}_j) \ &= \sqrt{} (\mathbf{x}_i - \mathbf{x}_j)^T Q^T Q (\mathbf{x}_i - \mathbf{x}_j) = \sqrt{} (\mathbf{x}_i - \mathbf{x}_j)^T (\mathbf{x}_i - \mathbf{x}_j) = \ell_2(\mathbf{x}_i, \mathbf{x}_j) \end{align*} $

This proves that the $\ell_2$ distances are invariant under $\mathrm{E}(n)$ transforms.

Uniqueness. Let ${\mathbf{x}_i}$ and ${\mathbf{y}_i}$ be two collection of $M$ points each where all in-between distance norms are identical, meaning $\ell_2(\mathbf{x}_i, \mathbf{x}_j) = \ell_2(\mathbf{y}_i, \mathbf{y}_j)$. We want to show that $\mathbf{x}_i = Q \mathbf{y}_i + t$ for some orthogonal $Q$ and translation $t$, for all $i$.

Proof. Subtract $\mathbf{x}_0$ from all ${\mathbf{x}_i}$ and $\mathbf{y}_0$ from all ${\mathbf{y}_i}$, so $\tilde{\mathbf{x}}_i = \mathbf{x}_i - \mathbf{x}_0$ and $\tilde{\mathbf{y}}_i = \mathbf{y}_i - \mathbf{y}_0$. As proven above, since translation is an $\mathrm{E}(n)$ transformation the distance norms are unaffected and:

$ \ell_2(\tilde{\mathbf{x}}_i, \tilde{\mathbf{x}}_j) = \ell_2(\mathbf{x}_i, \mathbf{x}_j) = \ell_2(\mathbf{y}_i, \mathbf{y}_j) = \ell_2(\tilde{\mathbf{y}}_i, \tilde{\mathbf{y}}_j). $

So without loss of generality, we may assume that $\mathbf{x}_0 = \mathbf{y}_0 = \mathbf{0}$. As a direct consequence $|| \mathbf{x}_i||_2 = || \mathbf{y}_i||_2$. Now writing out the square:

$ \mathbf{x}_i^T \mathbf{x}_i - 2 \mathbf{x}_i^T \mathbf{x}_j + \mathbf{x}_j^T \mathbf{x}_j = || \mathbf{x}_i - \mathbf{x}_j||_2^2 = || \mathbf{y}_i - \mathbf{y}_j||_2^2 = \mathbf{y}_i^T \mathbf{y}_i - 2 \mathbf{y}_i^T \mathbf{y}_j + \mathbf{y}_j^T \mathbf{y}_j $

And since $|| \mathbf{x}_i||_2 = || \mathbf{y}_i||_2$, it follows that $\mathbf{x}_i^T \mathbf{x}_j = \mathbf{y}_i^T \mathbf{y}_j$ or equivalently written as dot product $\langle \mathbf{x}_i, \mathbf{x}_j\rangle = \langle \mathbf{y}_i, \mathbf{y}_j \rangle$. Notice that this already shows that angles between pairs of points are the same.

At this moment, it might already be gnp_random_graph($M$, $p$) that the collections of points are indeed identical. To finalize the proof formally we will construct a linear map $A$ for which we will show that (1) it maps every $\mathbf{x}_i$ to $\mathbf{y}_i$ and (2) that it is orthogonal. First note that from the angle equality it follows immediately that for every linear combination:

$ || \sum_i c_i \mathbf{x}_i ||_2 = || \sum_i c_i \mathbf{y}_i ||_2 \quad (*). $

Let $V_x$ be the linear span of ${\mathbf{x}i}$ (so $V_x$ is the linear subspace of all linear combinations of ${\mathbf{x}i}$). Let ${\mathbf{x}{i_j}}{j=1}^d$ be a basis of $V_x$, where $d \leq n$. Recall that one can define a linear map by choosing a basis, and then define for each basis vector where it maps to. Define a linear map $A$ from $V_x$ to $V_y$ by the transformation from the basis $\mathbf{x}{i_j}$ to $\mathbf{y}{i_j}$ for $j=1, ..., d$. Now pick any point $\mathbf{x}_i$ and write it in its basis $\mathbf{x}i = \sum_j c_j \mathbf{x}{i_j} \in V_x$. We want to show $A \mathbf{x}_i = \mathbf{y}_i$ or alternatively $|| \mathbf{y}_i - A \mathbf{x}i||2 = 0$. Note that $A \mathbf{x}i = A \sum_j c_j \mathbf{x}{i_j} = \sum_j c_j A \mathbf{x}{i_j} = \sum_j c_j \mathbf{y}{i_j}$. Then:

$ \begin{align*} || \mathbf{y}i - \sum_j c_j \mathbf{y}{i_j}||_2^2 &= \langle \mathbf{y}i, \mathbf{y}i \rangle - 2 \langle \mathbf{y}i, \sum_j c_i \mathbf{y}{i_j} \rangle + \langle \sum_j c_i \mathbf{y}{i_j}, \sum_j c_i \mathbf{y}{i_j} \rangle \ &\stackrel{()}{=} \langle \mathbf{x}i, \mathbf{x}i \rangle - 2 \langle \mathbf{x}i, \sum_j c_i \mathbf{x}{i_j} \rangle + \langle \sum_j c_i \mathbf{x}{i_j}, \sum_j c_i \mathbf{x}{i_j} \rangle = \langle \mathbf{x}_i, \mathbf{x}_i \rangle - 2 \langle \mathbf{x}_i, \mathbf{x}_i \rangle + \langle \mathbf{x}_i, \mathbf{x}_i \rangle = 0. \end{align} $

Thus showing that $A \mathbf{x}_i = \mathbf{y}_i$ for all $i = 1, \ldots, M$, proving (1). Finally we want to show that $A$ is orthogonal, when restricted to $V_x$. This follows since:

$ \langle A \mathbf{x}{i_j}, A \mathbf{x}{i_j} \rangle = \langle \mathbf{y}{i_j}, \mathbf{y}{i_j} \rangle = \langle \mathbf{x}{i_j}, \mathbf{x}{i_j} \rangle $